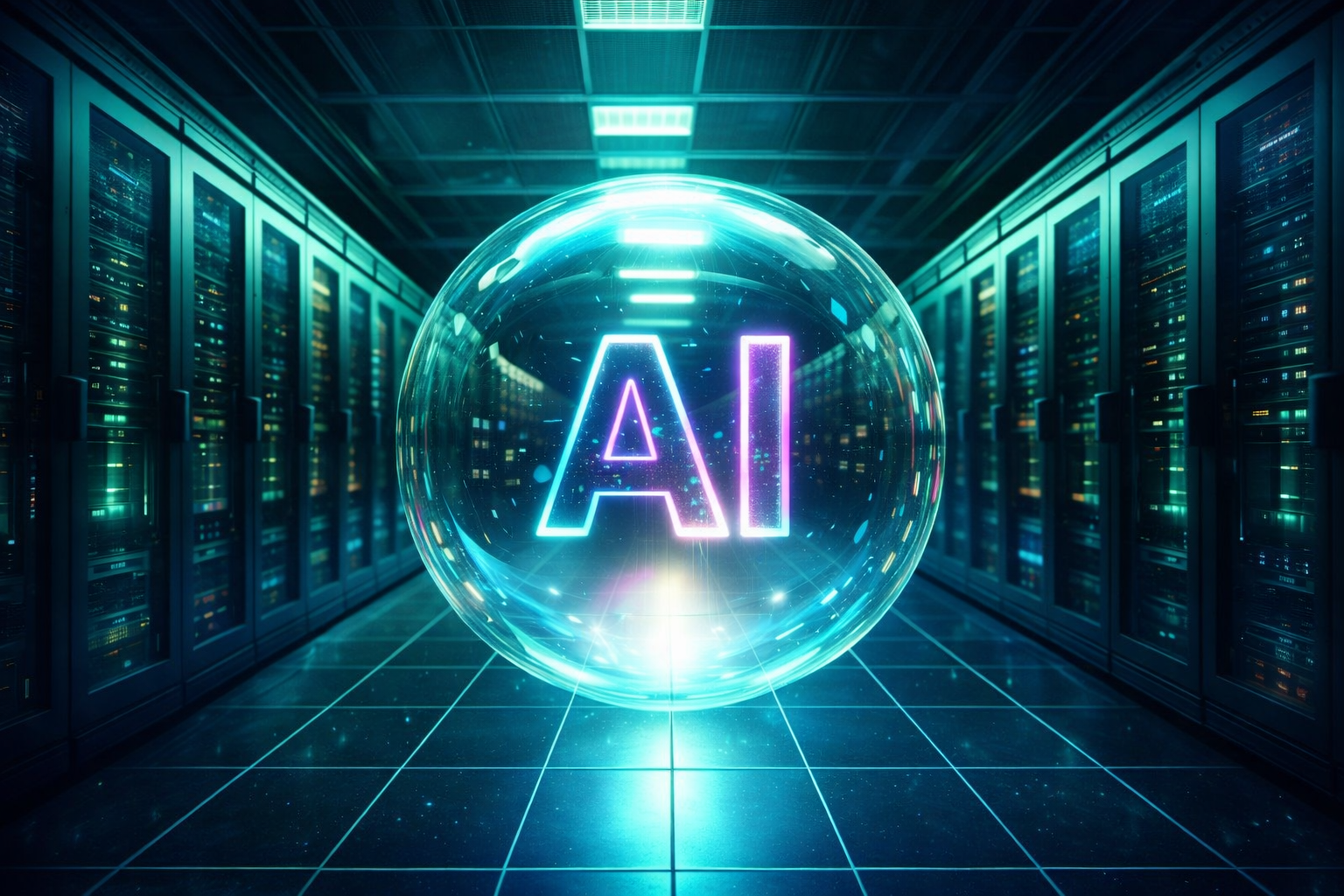

So it looks like ETH Zurich, EPFL, and CSCS will be deploying a fully open-source large language model (LLM). It was trained on Switzerland’s “Alps” supercomputer and is set to debut in “late summer 2025”.

Why this matters

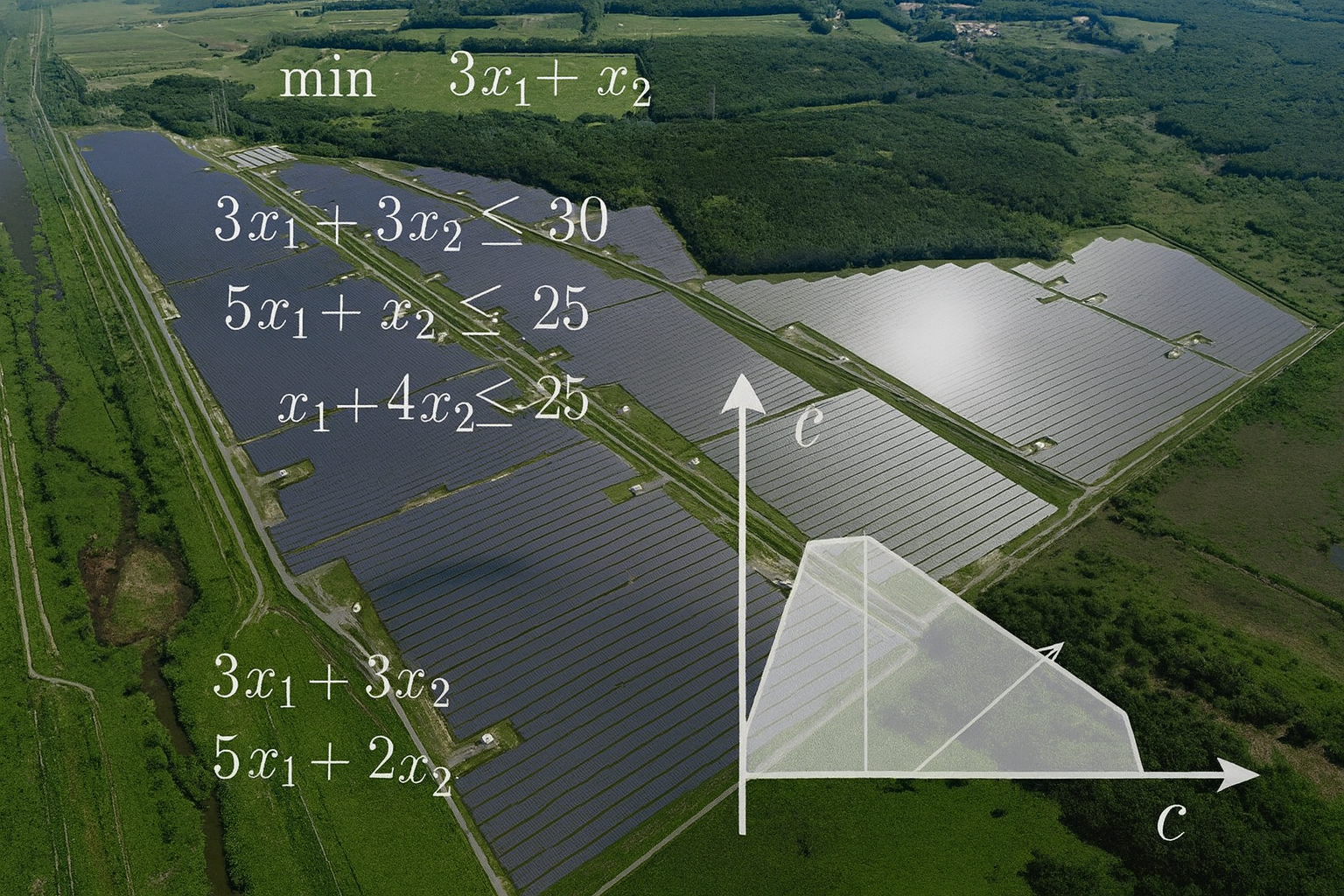

- Full transparency & accessibility – The model architecture, weights, and training data will all be released under an open license, enabling global scrutiny and collaboration across academia, government, and industry.

- Truly multilingual – Designed with global inclusion in mind, the model supports fluency in over 1,000 languages and was trained on a diverse dataset (approx. 60% English, 40% non-English).

- Scalable for diverse needs – Offering both 8B and 70B parameter versions, the model balances performance with accessibility; the 70B version places it among the top-tier open models globally.

- Powered by sovereign infrastructure – NVIDIA and HPE’s support via CSCS’s Alps highlights the power of public–private collaboration in securing infrastructure independence and innovation.
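
To make the 8B versus 70B trade-off a little more concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. The precisions listed (fp32, bf16, int8, int4) are our own assumptions for illustration; the announcement does not say which formats will be published. The figures ignore activations, KV cache, and runtime overhead, so real usage will be higher.

```python
# Rough weight-memory estimate: parameter count x bytes per parameter.
# Assumption: the precisions below are illustrative, not confirmed release formats.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate storage for the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Parameter counts taken from the 8B and 70B versions mentioned above.
    for label, params in (("8B", 8e9), ("70B", 70e9)):
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(params, precision)
            print(f"{label} @ {precision}: ~{gb:.0f} GB")
```

On these assumptions, the 8B version at 16-bit precision needs about 16 GB for its weights, which is within reach of a single high-end GPU, while the 70B version at the same precision needs about 140 GB and typically calls for several accelerators or a quantised format.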

Trustworthy and Democratised AI

This marks a pivotal step toward trustworthy and democratised AI: we know what it was trained on, and we can poke into its internals. Most of all, it proves there is more than one way to approach technology development.

Here at Full Stack Energy, we look forward to applying it to some domain-specific problems. The open nature of this LLM will be of critical interest to our customers in this space.