It’s not that the data isn’t there. It’s that getting to it, meaningfully, rapidly, and without an interpreter, is still surprisingly hard.

People don’t want another analytics dashboard, and they don’t want to learn SQL. They want answers. They want to ask questions in plain language and get useful, accurate replies. That’s where conversational interfaces come in.

By integrating natural language interfaces powered by large language models (LLMs), we can remove friction, surface insight, and empower teams to make better decisions, all without needing a data science degree or a tutorial video.

The Interface is the Intelligence

A conversational interface isn’t just a novelty layer for users to type into. It’s a new kind of access. Done right, it interprets not only what the user asks but why, and delivers output that’s tailored to their role and their context.

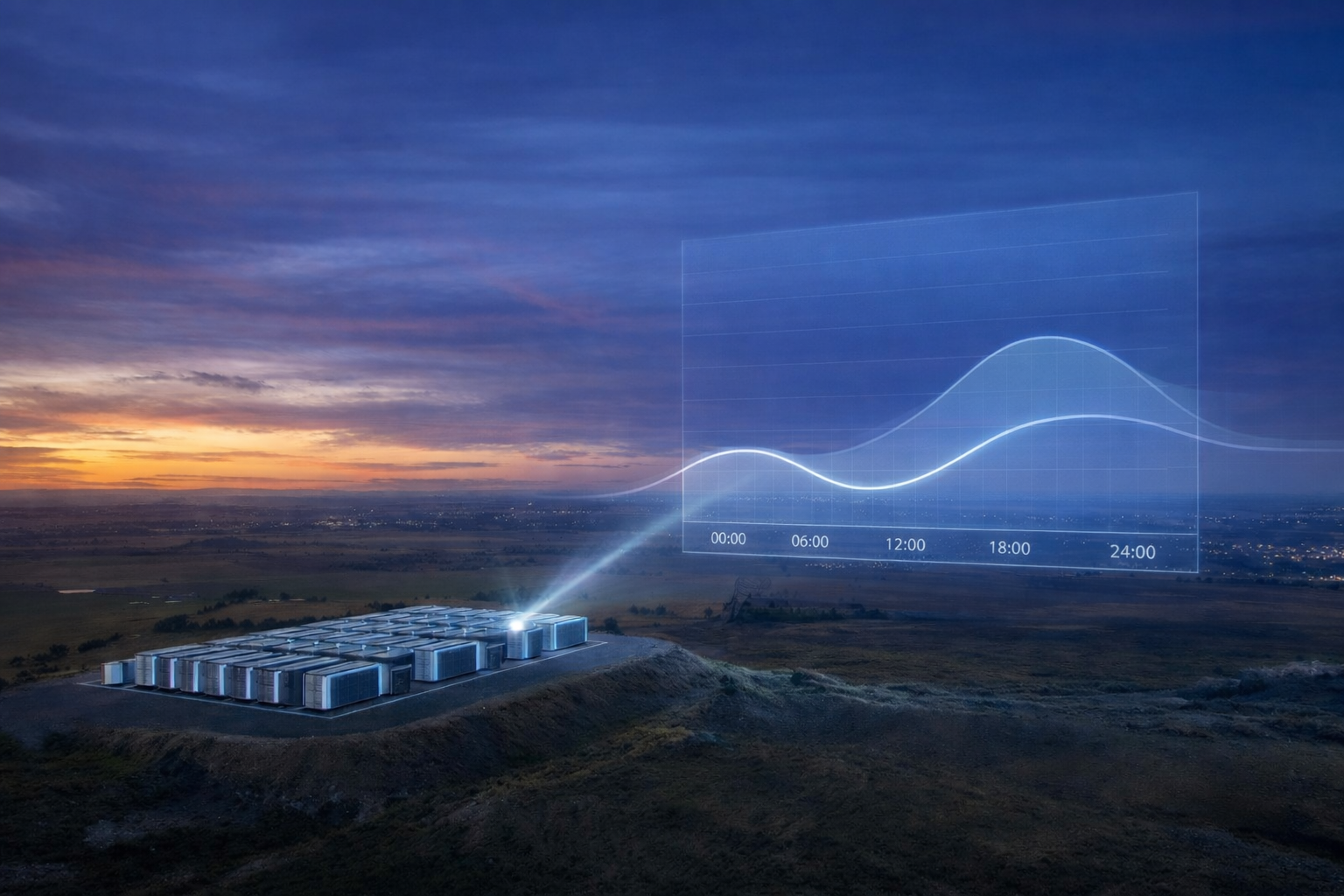

Someone in operations might ask, “Has our baseload shifted since the last demand response event?” while a commercial manager wants, “How much did we save last month from battery arbitrage?” Both are valid. Both are specific. And both, in traditional setups, might require navigating three systems and emailing someone for a report.

Now, they can ask and get an immediate, structured, and relevant answer. It’s not just convenience. It’s strategic acceleration.

Democratising Access Without Dumbing Down

Conventional tools put the burden on the user. Dashboards must be configured, filters tuned, reports drilled into, and spreadsheets downloaded. None of this is hard in isolation, but it is slow, can be distracting, and puts off the very people who need the information the most.

Conversational interfaces eliminate this overhead. They allow users to ask for what they need in the language they use day to day. This doesn’t mean dumbing down the data. It means reframing it.

Engineers can query raw figures. Executives can ask for summaries or trends. Support staff can request “the last 5 maintenance issues tagged as urgent.” One interface, many styles of interaction, each adapted to the user’s needs.

It also lowers the internal knowledge barrier. If only one or two people in your team know how to “get the data,” then that’s a bottleneck. With a conversational system, that expertise is encoded, discoverable, and available to anyone who needs it.

Full Stack Energy’s approach is built around grounding large language models in your real-world data. We don’t rely on generic web-trained models making educated guesses. Instead, we use Retrieval-Augmented Generation (RAG) to combine the reasoning capabilities of modern LLMs with your internal knowledge base: time series data, document stores, PDFs, reports, logs, databases, and more.

This approach ensures every answer can be traced to its source. No hallucinations, no vague generalisations. When a user asks, “What were the top three causes of HVAC faults at Site 7 last winter?”, the response is built from actual maintenance records and not extrapolated nonsense.

It’s explainable, auditable, and grounded. The intelligence doesn’t replace your data; it reveals it.
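To make the idea of traceable answers concrete, here is a minimal sketch of the retrieval step in a RAG pipeline. It is an illustration under stated assumptions, not Full Stack Energy’s implementation: the `Record` type, the `WO-…` identifiers, and the sample records are all made up, and simple word overlap stands in for the semantic search a production system would use. The point is that each retrieved record carries its source ID into the prompt, so the model can be asked to cite it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    source_id: str  # hypothetical identifier, e.g. a work-order number
    text: str


# Made-up sample records, for illustration only.
RECORDS = [
    Record("WO-101", "Site 7 HVAC fault: compressor failure in January."),
    Record("WO-102", "Site 7 HVAC fault: frozen coil after cold snap."),
    Record("WO-203", "Site 3 lighting upgrade completed."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,?!\"'").lower() for word in text.split()} - {""}


def retrieve(question: str, records: list[Record], top_k: int = 2) -> list[Record]:
    """Rank records by word overlap with the question.

    Word overlap is a stand-in for embedding-based semantic search.
    Records with no overlap are dropped rather than padded in.
    """
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(r.text)), r) for r in records]
    scored = [(score, r) for score, r in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:top_k]]


def build_prompt(question: str, context: list[Record]) -> str:
    """Assemble a prompt in which every line of context carries its source ID."""
    lines = [f"[{r.source_id}] {r.text}" for r in context]
    return (
        "Answer using only the records below and cite their IDs.\n"
        + "\n".join(lines)
        + f"\nQuestion: {question}"
    )


if __name__ == "__main__":
    question = "What caused HVAC faults at Site 7?"
    print(build_prompt(question, retrieve(question, RECORDS)))
```

Because the source IDs travel with the text, an answer that cites `WO-101` can be checked against the original record. If nothing relevant is retrieved, the context is empty and the system can say so instead of guessing.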

Behind the scenes, conversational interfaces rely on a powerful architecture.

- Vector databases enable semantic search across unstructured data.

- Embeddings capture the meaning and context of documents and queries.

- Time series databases allow fast, scalable querying of high frequency energy and sensor data.

- LLMs translate plain-language questions into structured queries, and turn the results back into readable answers.
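The hand-off between the language model and the data stores can be sketched roughly as follows. This is a simplified illustration, not a description of any particular product: the `StructuredQuery` fields, the two store names, and the JSON shape are assumptions, and a hard-coded JSON string stands in for real model output. It shows one cautious design choice, which is validating what the model produces before any backend is touched.

```python
import json
from dataclasses import dataclass

# Hypothetical backends the conversational layer could route to.
ALLOWED_STORES = {"timeseries", "documents"}


@dataclass(frozen=True)
class StructuredQuery:
    store: str    # "timeseries" or "documents"
    subject: str  # what to look up, e.g. a measurement or a topic
    site: str


def parse_model_output(raw: str) -> StructuredQuery:
    """Parse and validate the JSON a model might return for a question."""
    data = json.loads(raw)
    query = StructuredQuery(
        store=data["store"], subject=data["subject"], site=data["site"]
    )
    if query.store not in ALLOWED_STORES:
        raise ValueError(f"unknown store: {query.store!r}")
    return query


def route(query: StructuredQuery) -> str:
    """Describe which backend would serve the query (no real I/O here)."""
    if query.store == "timeseries":
        return f"time series lookup: {query.subject} at {query.site}"
    return f"semantic document search: {query.subject} at {query.site}"


if __name__ == "__main__":
    # Stand-in for model output to "Has our baseload shifted at Site 7?"
    raw = '{"store": "timeseries", "subject": "baseload", "site": "Site 7"}'
    print(route(parse_model_output(raw)))
```

In a real deployment, `route` would call the time series database or the vector database rather than return a description, but the shape of the flow is the same: language in, validated structure out, then the right store for the job.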

But your users don’t need to know that; they just need it to work. The point of the interface is to make the power of your data infrastructure accessible in plain terms.

Flexible, Integrated, and Secure

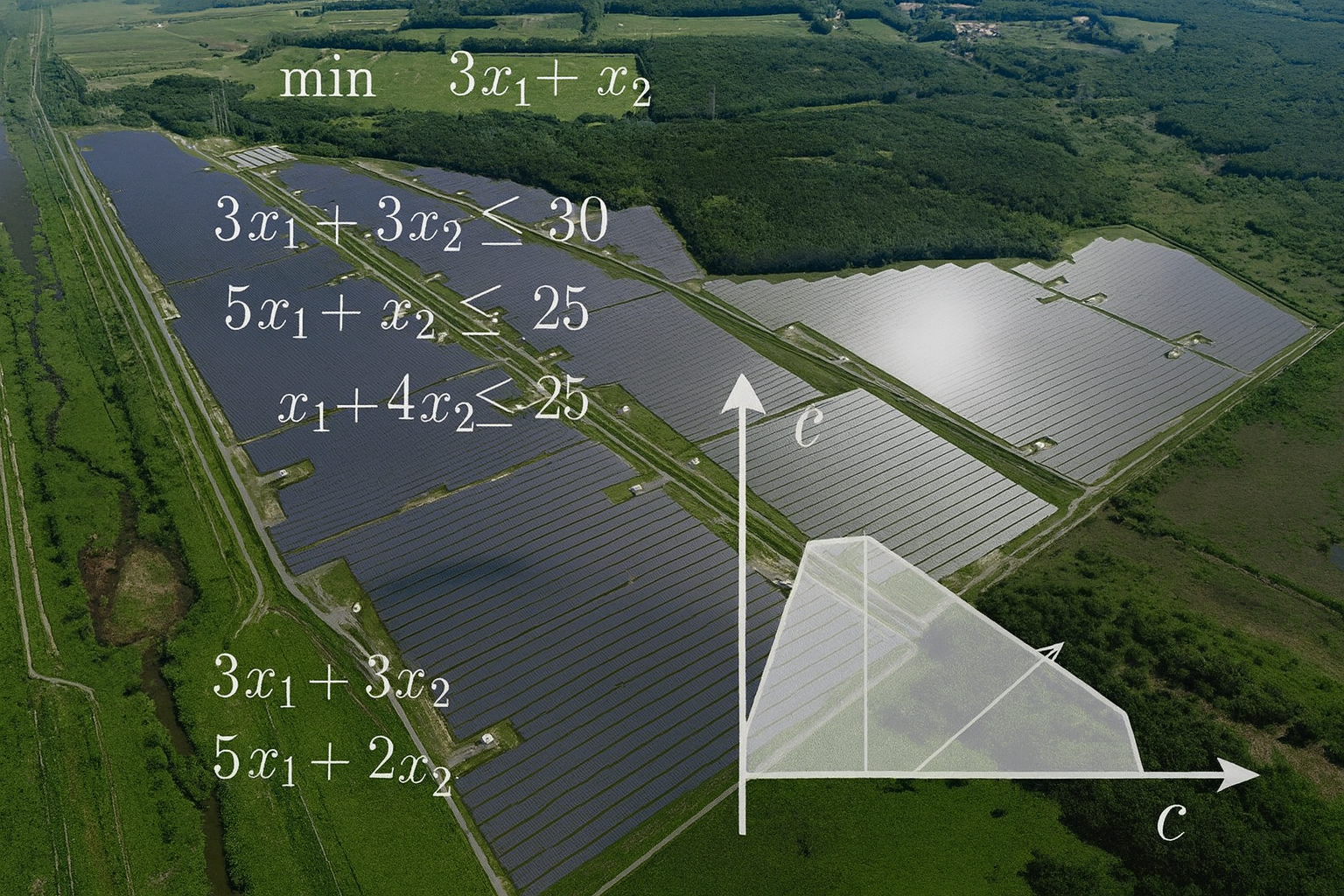

At Full Stack Energy, we don’t offer one-size-fits-all platforms. We build systems to suit how you work. The conversational layer can integrate with existing systems, whether that’s your SCADA, EMS, InfluxDB, PostgreSQL, or something older and altogether weirder.

It can be deployed on-premises for full data sovereignty, or in the cloud with secure access layers. We support edge deployments where local inference is needed, and hybrid setups where retrieval and reasoning happen close to the source.

Most importantly, the system evolves. As your data grows and your questions become more complex, the model’s understanding grows too. This isn’t a static tool; it’s an intelligent service that adapts with your business.

From Friction to Flow

The true power of conversational interfaces isn’t that they answer questions. It’s that they unlock curiosity.

When users can interact with data the way they interact with a colleague, by asking follow-ups, clarifying, or digging deeper, they start to ask more. They spot patterns. They challenge assumptions. They turn data into insight, and insight into action.

The result is faster decisions, broader access, and less time spent waiting on reports or reverse-engineering a dashboard filter.

Conversational interfaces don’t replace your existing systems. They sit on top of them, cut through the noise, and let your team focus on what matters.

At Full Stack Energy, we don’t just bolt on a chatbot. We build intelligent, grounded systems that give your data a voice. And that voice speaks your language.

What would your team discover if getting answers was as easy as asking a colleague?

Stop filtering, drilling, and waiting. Start exploring, challenging, and acting. Your data already holds the answers.

It’s time to start the conversation. Let’s talk.