Understanding the Black Box Nature of AI

The term "black box", in the context of AI, refers to a property of many current machine learning models: their decision-making process is opaque to, or not easily interpretable by, both developers and operators. These models, especially deep neural networks, often yield highly accurate results but offer little insight into how those results were reached.

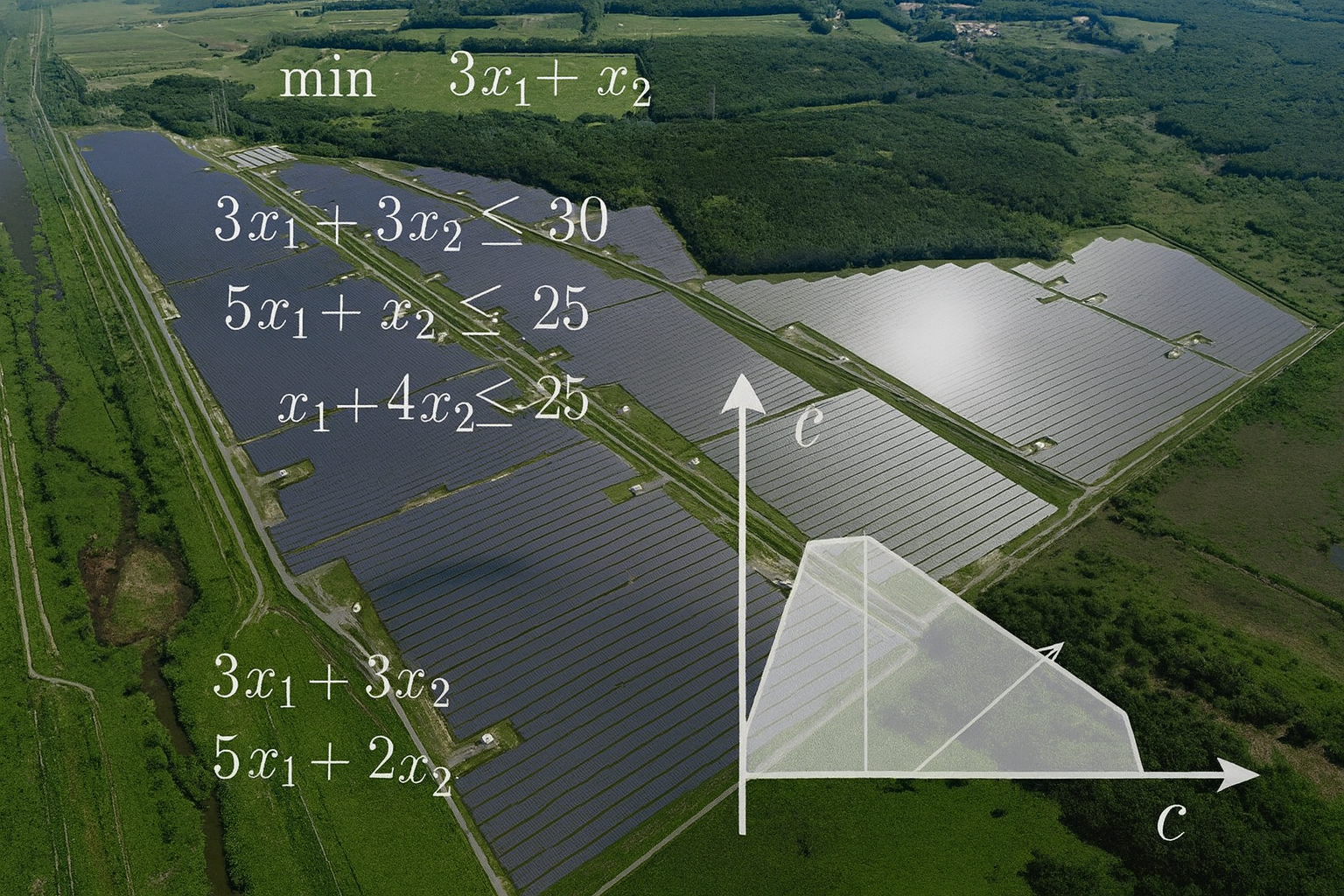

The Case for AI in Control Systems

AI systems can rapidly analyse vast amounts of sensor data, whether historical data stored in a database or live data arriving in real time. Those sensors may record not just the electrical performance of the control systems themselves, but also other events that, although only tangentially related, have a real effect on the operation of the system. For example, in energy management on an electrical grid, AI can dynamically adjust power distribution and load control based on real-time demand, projected demand, the weather forecast, demographic analysis and, of course, the status of the equipment itself. This can potentially be done far more efficiently than by a human operator, even one equipped with identical data feeds, and can lead to much-desired energy savings and increased reliability of the system.

AI also excels at predicting equipment failure before it occurs by recognising patterns in operational data. This predictive capability can prevent downtime in critical systems by, for example, allowing parts to be replaced before they actually fail, saving money and maintaining service continuity. Over time, such failure prediction may also build up valuable operational data on the replaced parts, and knowing how and why they failed can help improve manufacturing quality.

Anomaly detection is already a critical feature in many electrical systems. AI systems can quickly and efficiently monitor for unusual patterns or behaviours that might indicate a fault or a security breach, not just the equipment failures outlined previously. This capability is crucial in sectors such as nuclear power generation and aviation control.
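
A minimal sketch of the monitoring idea, assuming a single numeric sensor stream: flag any reading that lies more than a set number of standard deviations from a rolling baseline of recent normal readings. This rolling z-score test is a simple statistical stand-in for the AI-based detectors described above, and the class name, window size, and threshold are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flags readings that deviate strongly from a rolling baseline.

    Hypothetical example; window and threshold are not tuned values.
    """

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        # Baseline of recent readings judged to be normal.
        self.readings = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the baseline."""
        anomalous = False
        # At least two readings are needed to estimate a standard deviation.
        if len(self.readings) >= 2:
            mu = mean(self.readings)
            sigma = stdev(self.readings)
            if sigma == 0:
                # Perfectly constant baseline: any change is a deviation.
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.threshold
        # Only normal readings extend the baseline.
        if not anomalous:
            self.readings.append(value)
        return anomalous
```

Keeping flagged readings out of the baseline is a deliberate choice: it stops a sustained fault from gradually being absorbed as the new normal, at the cost of needing a manual reset if the system legitimately moves to a new operating point.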

Finally, in complex systems where conditions may change rapidly, AI can learn and adapt control strategies without human intervention, making responses to incidents or changes in the system much more rapid than was possible previously.

Lack of Transparency

The primary issue with deploying black box AI in control systems is the inability to understand why a particular action was taken. In a scenario where an AI decides to redirect power flow or alter traffic signals, for example, the reasoning behind these decisions must be transparent for the sake of accountability and safety, and to ensure that each decision aligns with human-defined objectives.

This lack of transparency can become a significant hurdle in control systems, where decisions can have immediate and potentially serious real-world consequences.

When such a control system makes a decision, it’s often extremely difficult to uncover exactly why it was made. Indeed, because some such systems are non-deterministic, a completely different decision may be made in similar circumstances. In such cases, not only is the model opaque by nature, but its decisions cannot be reproduced either, which rules out the usual black box approach of replaying the same inputs and observing the outputs in order to trace the decision process.

Therefore, if the control system makes a decision that isn’t optimal or, in the worst case, results in a system error or collapse, debugging the decision-making process can become practically intractable.

This is because, unlike traditional software, whose deterministic logic and flow of execution can readily be traced, an AI model’s decisions emerge from thousands, or even millions, of complex, interacting learned parameters. Even if the model’s internal state could be captured at the moment a decision was made, tracing how that state produced the decision quickly becomes an extremely complex, practically intractable problem.

Regulatory and Trust Issues

Control systems, especially in public infrastructure, are heavily regulated. The trust required for these systems to operate autonomously hinges on the ability to validate and explain the decisions that AI control systems make. As highlighted in the section on transparency, regulatory bodies might not approve systems whose decision-making process isn’t clearly understandable and therefore auditable.

Moreover, any control system that isn’t rigorously deterministic may be regarded as untrustworthy by both regulatory bodies and the public at large, as there is no solid guarantee of what it will do in a given set of circumstances. Public trust plays a large part in the operation of public infrastructure, and negative publicity around an errant control system may have significant financial consequences and cause severe reputational damage.

Security Risks

Black box AI control systems can, by their nature, introduce vulnerabilities: the models may be manipulated or "tricked" into making incorrect decisions, with no clear visibility into how that manipulation occurred. In critical infrastructure, this could lead to catastrophic security breaches, with the consequences already outlined under Regulatory and Trust Issues.

To subvert a conventional control system, a malicious actor would have had to gain control of it and manipulate it directly, actions that would be traceable through logs and audit trails. An actor wishing to take over an AI control system, by contrast, may simply need to lead it down a different path by supplying deliberately misleading data, without needing to understand the nuances of a deterministic system. After the fact, tracing such an attack is also extremely difficult because of the transparency and debugging issues described earlier.

Human Oversight and Training

With an AI control system performing its day-to-day operations, an operator would need to be familiar with its normal decision-making process in order to intervene should its decisions become questionable or even dangerous. This isn’t a trivial exercise because, unlike in more conventional, deterministic control systems, the decision-making process can be difficult to understand, may not be repeatable in the same situation, and is often completely opaque to the end user.

Training human staff therefore becomes much more challenging, which in effect reduces their capacity to manage or override AI decisions when it becomes necessary.

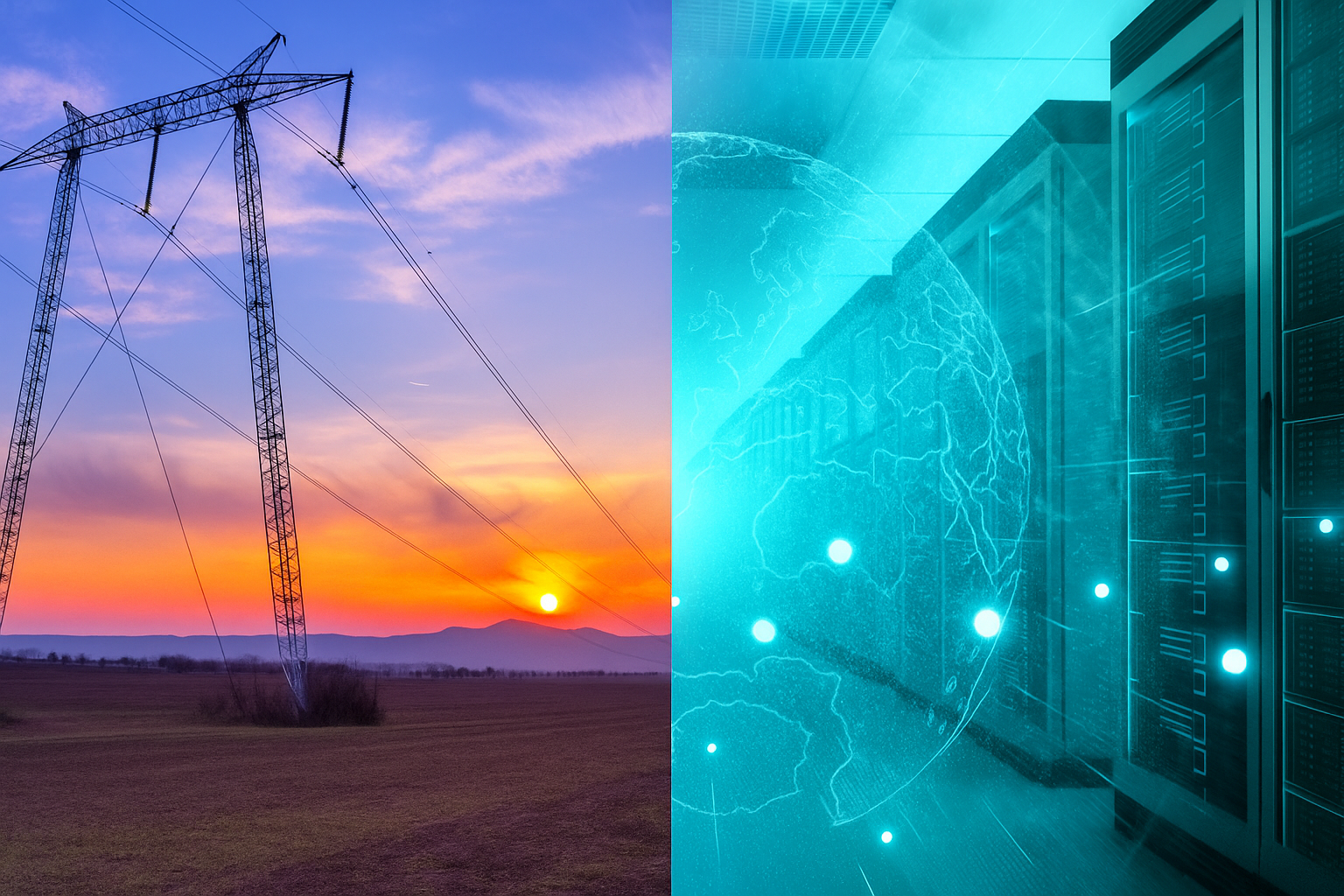

Systemic Failures

If AI systems are deployed in control environments without thorough testing or understanding, the consequences could be severe. For instance, an AI misjudging load distribution in an electrical grid could lead to blackouts, equipment damage, and significant financial penalties.

Public and Stakeholder Backlash

Deploying untested or poorly understood AI in critical sectors could lead to significant public and stakeholder distrust, potentially slowing AI adoption in other, less critical sectors and lowering public confidence generally.

Legal and Ethical Dilemmas

When an AI control system makes a decision that leads to loss or damage, who is liable? The lack of transparency could complicate legal accountability, raising ethical concerns about who or what should be held responsible for the actions of the AI control system. Such a situation could easily lead to costly litigation and reputational damage for the parties involved.

Long-Term Reliability

Over time, if AI systems fail or behave unpredictably, this could lead to broader scepticism of AI technology, undermining innovation and investment in AI solutions as well as confidence in the companies supplying the technology, and hindering further deployment.

Mitigating the Risks

Harnessing the benefits of AI in control systems whilst mitigating the risks outlined above may be achieved in the following ways.

Develop Explainable AI (XAI)

Research into AI technologies that can explain their decisions in human-understandable terms is crucial. Such technologies would also allow for better integration into control systems where decisions need human validation. This makes a compelling case for developing systems that fully document how the AI control system operates and how and why it made the decisions it did, and that provide a comprehensive audit trail.
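
One possible shape for such an audit trail is sketched below, under stated assumptions: every decision is stored with the inputs the model saw, the model version, the action taken, and each input’s contribution to the output. For a linear model those contributions are exact (weight times input value); a deep model would need an approximate attribution method instead. Entries are hash-chained so that later edits to earlier records are detectable. `DecisionRecord`, `explain_linear`, `append_record`, `verify_chain`, and all field and feature names are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable decision: what the controller saw, what it did, and why."""
    model_version: str
    inputs: dict[str, float]
    action: str
    output: float
    # Contribution of each input to `output`, from the explanation method in use.
    contributions: dict[str, float]
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def explain_linear(
    weights: dict[str, float], bias: float, inputs: dict[str, float]
) -> tuple[float, dict[str, float]]:
    """For a linear model the explanation is exact: each input contributes
    weight * value, and the output is the bias plus those contributions."""
    contributions = {name: w * inputs[name] for name, w in weights.items()}
    return bias + sum(contributions.values()), contributions


def append_record(log: list[str], record: DecisionRecord) -> None:
    """Append the record as a JSON line that embeds the SHA-256 hash of the
    previous line, chaining the entries together."""
    prev_hash = hashlib.sha256(log[-1].encode()).hexdigest() if log else ""
    log.append(json.dumps({"prev_hash": prev_hash, **asdict(record)}, sort_keys=True))


def verify_chain(log: list[str]) -> bool:
    """Check that each entry's stored prev_hash matches the hash of the entry
    before it. Editing any entry except the last breaks the chain unless
    every later entry is rewritten as well."""
    for i, line in enumerate(log):
        expected = hashlib.sha256(log[i - 1].encode()).hexdigest() if i else ""
        if json.loads(line)["prev_hash"] != expected:
            return False
    return True
```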

Hybrid Systems

Systems could be developed that take a “best of both worlds” approach: hybrid architectures in which AI control works alongside traditional control logic, allowing a fallback to well-understood processes if the AI system fails or behaves unexpectedly.
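
A minimal sketch of such a hybrid arrangement, assuming a single numeric setpoint: the AI controller proposes a value, and deterministic checks (hard limits and a maximum step change) decide whether to accept it or to use a conventional fallback controller instead. The function name, parameters, and checks are illustrative assumptions rather than a reference design.

```python
import math
from typing import Callable

# A controller maps a measurement to a proposed setpoint.
Controller = Callable[[float], float]


def hybrid_setpoint(
    ai_controller: Controller,
    fallback_controller: Controller,
    measurement: float,
    previous_setpoint: float,
    lower: float,
    upper: float,
    max_step: float,
) -> tuple[float, str]:
    """Return (setpoint, source), where source is "ai" or "fallback".

    The AI proposal is used only if it is finite, within [lower, upper], and
    no more than max_step away from the previous setpoint. The fallback
    controller is assumed to be conventional, independently validated logic.
    """
    try:
        proposal = ai_controller(measurement)
    except Exception:
        # Any error raised by the AI component triggers the fallback.
        return fallback_controller(measurement), "fallback"

    is_safe = (
        math.isfinite(proposal)
        and lower <= proposal <= upper
        and abs(proposal - previous_setpoint) <= max_step
    )
    if is_safe:
        return proposal, "ai"
    return fallback_controller(measurement), "fallback"
```

Because the acceptance checks are plain, deterministic code, they can be reviewed and tested in the conventional way, and the returned label records which controller was in charge of each decision.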

Simulation and Testing

Extensive simulation in controlled environments can help engineers understand AI behaviour under various scenarios before real-world deployment. Although simulation can never be totally comprehensive, it would offer demonstrable use cases and help to increase confidence in the system for those specific cases.

Regulatory Frameworks

Standards for the operation of AI in control systems, including requirements for transparency, safety, and accountability, could be developed and enshrined in legislation. Systems not meeting these standards would not be permitted in specific applications such as critical public electrical grid infrastructure.

Continuous Monitoring and Learning

It is vital that continual monitoring is enabled for any deployed AI control system, along with mechanisms that learn from which decisions were made under which specific circumstances. This would help to increase both confidence in, and the capability of, these systems.

Conclusion

While AI presents transformative opportunities for new and innovative control systems, the black box nature of many advanced AI models poses significant challenges to their adoption in public, and especially critical, infrastructure.

The rush to deploy such technologies without addressing the issues outlined in this article may lead to setbacks not only in the immediate adoption of such technologies, but also in the broader acceptance of AI control systems.

Balancing the drive for innovation with the need for accountability, safety, and trust will be key to the future integration of AI in modern control systems.