The Data Deluge Problem

Sensors, IoT devices, distributed applications, and security systems generate terabytes of logs every day. This isn’t just an abstract problem; it’s crippling in a very practical sense. The sheer volume of logs can overwhelm storage, inflate costs, and make manual analysis almost laughably impossible. You could store everything (a tempting but expensive option) or rely on humans to sift through it all (a slow and error-prone process). Or you could do what the smart money is attempting to do: use AI.

Enter AI-Powered Log Analysis

AI doesn’t get bored the way a software engineer does.

It doesn’t suffer from alert fatigue like an engineer woken at 2 AM by yet another production alert, and it doesn’t stare blankly at a screen at 3 AM wondering why a database transaction is failing.

Instead, it methodically chews through every byte of telemetry, detecting patterns, spotting anomalies, and surfacing critical insights before a human even realises that something might be wrong.

1: Anomaly Detection: Spotting the Strange Before It’s Catastrophic

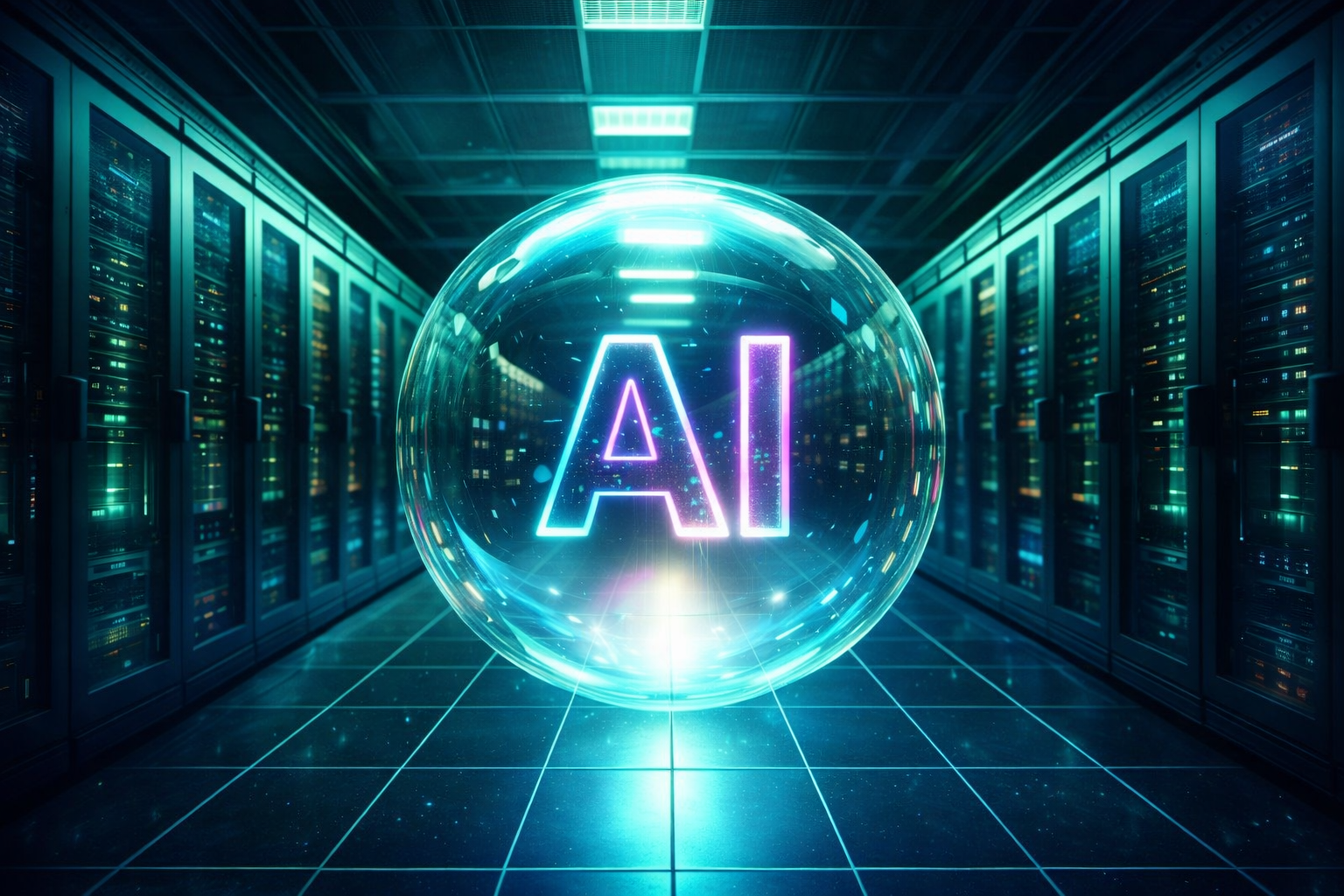

Anomaly detection is where AI can really shine. Instead of relying on static rules ("if X happens, alert someone"), machine learning dynamically learns what "normal" looks like in your logs. When something strays from the norm, like a sudden spike in failed authentication attempts or an unexpected drop in request volume, AI can flag it instantly.
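As a rough illustration of learning "normal" rather than hard-coding a rule, the sketch below keeps a rolling baseline of recent values and flags anything more than a few standard deviations away from it. This is a deliberately simple statistical stand-in for a trained model; the window size, threshold, and the failed-login counts are all assumptions made up for the example.

```python
from collections import deque
from statistics import mean, stdev


def make_detector(window=30, threshold=3.0, min_history=10):
    """Return a function that flags values far from the recent baseline."""
    history = deque(maxlen=window)

    def check(value):
        is_anomaly = False
        if len(history) >= min_history:
            mu = mean(history)
            sigma = stdev(history)
            if sigma == 0:
                # Perfectly flat baseline: any change at all is unusual.
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > threshold
        # Learn only from values judged normal, so a single spike does
        # not immediately become part of the baseline.
        if not is_anomaly:
            history.append(value)
        return is_anomaly

    return check


# Hypothetical failed-login counts per minute.
counts = [4, 5, 3, 6, 4, 5, 4, 6, 5, 4, 5, 3, 48, 5, 4]
check = make_detector()
flagged = [i for i, count in enumerate(counts) if check(count)]
print(flagged)
```

Because the check is two-sided, the same detector would also catch an unexpected drop, such as request volume falling well below its usual level.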

Consider an IoT-based manufacturing system. A typical sensor might generate thousands of data points per minute, tracking everything from temperature to pressure levels. A human operator? You’re lucky if they can catch a critical failure before it escalates. An AI model, however, detects subtle shifts in patterns—say, an imperceptible but growing temperature fluctuation—that could signal impending hardware failure before disaster strikes.
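One hedged way to picture the "growing fluctuation" case is to compare how much a sensor’s readings vary now against how much they varied during a known-good baseline period. The sketch below does exactly that with a standard-deviation ratio; the synthetic readings, window sizes, and the threshold of 3.0 are assumptions for illustration, not values from any real plant.

```python
from statistics import pstdev


def fluctuation_ratio(readings, baseline_size=20, recent_size=20):
    """Ratio of recent spread to baseline spread in a sensor series."""
    baseline = readings[:baseline_size]
    recent = readings[-recent_size:]
    base_sd = pstdev(baseline)
    recent_sd = pstdev(recent)
    if base_sd == 0:
        # A flat baseline cannot be divided by; treat any spread as infinite growth.
        return float("inf") if recent_sd > 0 else 1.0
    return recent_sd / base_sd


# Synthetic temperature readings (degrees C): an oscillation around 70
# whose amplitude grows by only 0.01 per sample.
readings = [
    70.0 + (0.1 + 0.01 * i) * (1 if i % 2 == 0 else -1)
    for i in range(100)
]

ratio = fluctuation_ratio(readings)
needs_inspection = ratio > 3.0  # hypothetical threshold
print(round(ratio, 2), needs_inspection)
```

No single step in this series looks alarming on its own, which is the point: the signal only shows up when recent behaviour is compared with the baseline.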

Not all anomalies are catastrophic, however. Some are just weird, but interesting. AI excels at identifying meaningful patterns that might escape human intuition.

Take log data from a banking application—certain transactions might correlate with fraud, but the relationship is buried beneath layers of seemingly unrelated log events. AI finds those connections, surfacing hidden insights that even experienced analysts might miss.

Log files are commonly bloated with useless noise: repeated messages, routine background processes, and system-level chatter. AI-driven log aggregation tools automatically filter and summarise logs, so that only the most relevant data gets stored or escalated.

This isn’t theoretical; it’s already happening. Platform technologies such as Adaptive Telemetry classify logs based on actual usage, dynamically prioritising high-value telemetry and discarding redundant data.

The result? Massive cost savings and reduced cognitive load on engineers.
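To make the filtering-and-summarising idea concrete, here is a minimal sketch that collapses repeated messages into templates by masking the parts that vary (IP addresses and numbers) and then counting each template. It is a simple rule-based approximation and not how any particular product works; the masking rules and sample log lines are assumptions.

```python
import re
from collections import Counter

# Order matters: mask IP addresses before masking bare numbers.
_MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<ip>"),
    (re.compile(r"\d+"), "<num>"),
]


def to_template(line):
    """Replace variable fields so similar lines become identical."""
    for pattern, token in _MASKS:
        line = pattern.sub(token, line)
    return line


def summarise(lines):
    """Return (template, count) pairs, most frequent first."""
    return Counter(to_template(line) for line in lines).most_common()


# Hypothetical log lines.
logs = [
    "GET /health 200 from 10.0.0.5 in 3ms",
    "GET /health 200 from 10.0.0.6 in 4ms",
    "GET /health 200 from 10.0.0.5 in 2ms",
    "payment 8841 failed: card declined",
    "GET /health 200 from 10.0.0.7 in 3ms",
]
summary = summarise(logs)
for template, count in summary:
    print(count, template)
```

Five lines reduce to two templates here. One trade-off worth noting: masking every number also hides status codes, so a real pipeline would likely keep fields such as `200` versus `500` distinct.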

Real World Applications

Cybersecurity: Hunting for Stealthy Threats

Security logs are an absolute goldmine, if you can extract the right insights. AI-driven threat detection systems process logs from firewalls, authentication servers, and network traffic to spot threats in real time. Unlike rule-based approaches that rely on predefined signatures, AI can detect previously unknown attack vectors by identifying suspicious behavioural patterns.

For example, brute force login attempts might follow a predictable pattern. But what if an attacker spreads attempts across multiple IPs in a slow, coordinated attack?

A human might not see it. AI does.
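The slow, multi-IP case is easier to see once failures are grouped by the account being targeted instead of by source address. The sketch below is a plain heuristic, not a learned model, and the event format, thresholds, and sample data are all assumptions: it flags any account that receives many failed logins from many distinct IPs within one long window.

```python
from collections import defaultdict


def find_distributed_bruteforce(events, window_s=3600, min_ips=5, min_failures=10):
    """Flag accounts hit by many failures from many IPs within one window.

    `events` holds (timestamp_seconds, source_ip, username) tuples for
    failed logins, sorted by timestamp.
    """
    by_user = defaultdict(list)
    for ts, ip, user in events:
        by_user[user].append((ts, ip))

    flagged = {}
    for user, attempts in by_user.items():
        start = 0
        for end in range(len(attempts)):
            # Advance the window start until the span fits in window_s.
            while attempts[end][0] - attempts[start][0] > window_s:
                start += 1
            window = attempts[start:end + 1]
            ips = {ip for _, ip in window}
            if len(window) >= min_failures and len(ips) >= min_ips:
                flagged[user] = (len(window), len(ips))
                break
    return flagged


# Hypothetical data: one failure against "admin" every 4 minutes, rotating
# through 6 IPs, plus a user who mistypes a password three times.
events = [(i * 240, f"203.0.113.{i % 6 + 1}", "admin") for i in range(12)]
events += [(100 + i * 5, "198.51.100.7", "bob") for i in range(3)]
events.sort()

suspicious = find_distributed_bruteforce(events)
print(suspicious)
```

In this data each attacking IP tries only once every 24 minutes, so a per-IP rate limit would stay quiet, while the per-account view makes the coordinated pattern stand out.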

Cloud Infrastructure Monitoring: Keeping Systems Healthy

Cloud environments generate petabytes of log data. AI-powered observability tools monitor infrastructure logs, detecting performance degradation before it impacts users. Imagine an AI model tracking server response times across a Kubernetes cluster.

If response times start fluctuating in a way that correlates with CPU spikes or memory leaks, the AI flags it before end users even notice a slowdown.
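A first approximation of "fluctuating in a way that correlates with CPU spikes" is a plain Pearson correlation between two metric series. The sketch below computes it by hand so it has no dependencies; the metric names and per-minute samples are invented for the example, and a strong correlation is a hint about where to look, not proof of cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / sqrt(var_x * var_y)


# Hypothetical per-minute samples for one pod.
cpu_percent = [22, 25, 24, 61, 23, 26, 78, 24, 85, 27]
p95_latency_ms = [110, 118, 112, 240, 115, 121, 310, 117, 335, 123]
free_disk_gb = [40, 41, 39, 40, 42, 40, 41, 39, 40, 41]

r_cpu = pearson(cpu_percent, p95_latency_ms)
r_disk = pearson(free_disk_gb, p95_latency_ms)
print(round(r_cpu, 3), round(r_disk, 3))
```

Latency tracks CPU closely in this sample and is essentially unrelated to free disk space, which is the kind of contrast that helps narrow down a likely cause.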

Predictive Maintenance: Fixing Before Breaking

The same pattern-spotting applies to physical equipment. In the IoT manufacturing scenario described earlier, a model watching sensor telemetry can pick up a small but growing temperature fluctuation and flag the machine for maintenance before the hardware fails.

The Future: AI-Driven Autonomous Systems

Where is this all heading? AI’s ability to process and interpret logs is rapidly evolving towards autonomous decision-making. Future systems won’t just detect anomalies; they’ll act on them. A security AI won’t just flag an intrusion attempt; it will dynamically adjust firewall rules in real time. A cloud observability AI won’t just highlight a failing container; it will automatically spin up a new instance to handle the load.
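The step from detection to action can be pictured as a playbook that maps each kind of finding to a remediation. The sketch below is purely illustrative: the `Finding` type, the finding kinds, and both handlers are hypothetical, and the handlers only describe what they would do instead of touching a real firewall or orchestrator.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    kind: str    # e.g. "intrusion_attempt" or "failing_container"
    target: str  # e.g. an IP address or a container name


def block_ip(target):
    # Placeholder: a real handler would call the firewall's own API.
    return f"would add firewall rule: deny from {target}"


def replace_container(target):
    # Placeholder: a real handler would call the orchestrator's own API.
    return f"would start a replacement for container {target}"


PLAYBOOK = {
    "intrusion_attempt": block_ip,
    "failing_container": replace_container,
}


def respond(finding):
    """Pick a remediation for a finding, or escalate to a human."""
    action = PLAYBOOK.get(finding.kind)
    if action is None:
        return f"escalate to on-call: {finding.kind} on {finding.target}"
    return action(finding.target)


print(respond(Finding("intrusion_attempt", "203.0.113.9")))
print(respond(Finding("failing_container", "checkout-7f9c")))
print(respond(Finding("disk_full", "db-1")))
```

Anything the playbook does not recognise falls back to a human, which is one reasonable way to phase in automation gradually.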

We’re heading toward a future where human intervention in log analysis is optional, not required.

Final Thoughts: AI Is Not Just an Option, It’s Becoming a Necessity

The scale of today’s log data isn’t just "big", it’s incomprehensible to humans. AI doesn’t just help manage the flood; it turns it into actionable insights.

By detecting anomalies, uncovering patterns, reducing noise, and ultimately automating response mechanisms, AI transforms logs from an overwhelming burden into a critical asset.

The question isn’t whether AI will be used for log analysis, it’s whether your organisation can afford to operate without it. Because in a world drowning in data, those who can find the signal in the noise first will win.